Red → Gold → Refactor

TDD for the AI era (and why “just make it green” is no longer enough).

Red-Green-Refactor was designed for humans writing code one careful line at a time. But AI doesn’t think in minimal changes – it can draft entire features at once.

So why are we still asking it to write the smallest possible implementation, and then acting surprised when it “helpfully” rewrites the whole file anyway?

Red-Gold-Refactor is TDD for the AI era.

This Ward goes deeper into what that means in practice: writing tests as high-quality prompts, defining "Gold Standard" implementations, and keeping humans accountable without vibe-coding into production fires.

If Ward #1 was the reframe, this is the “show me the loop”.

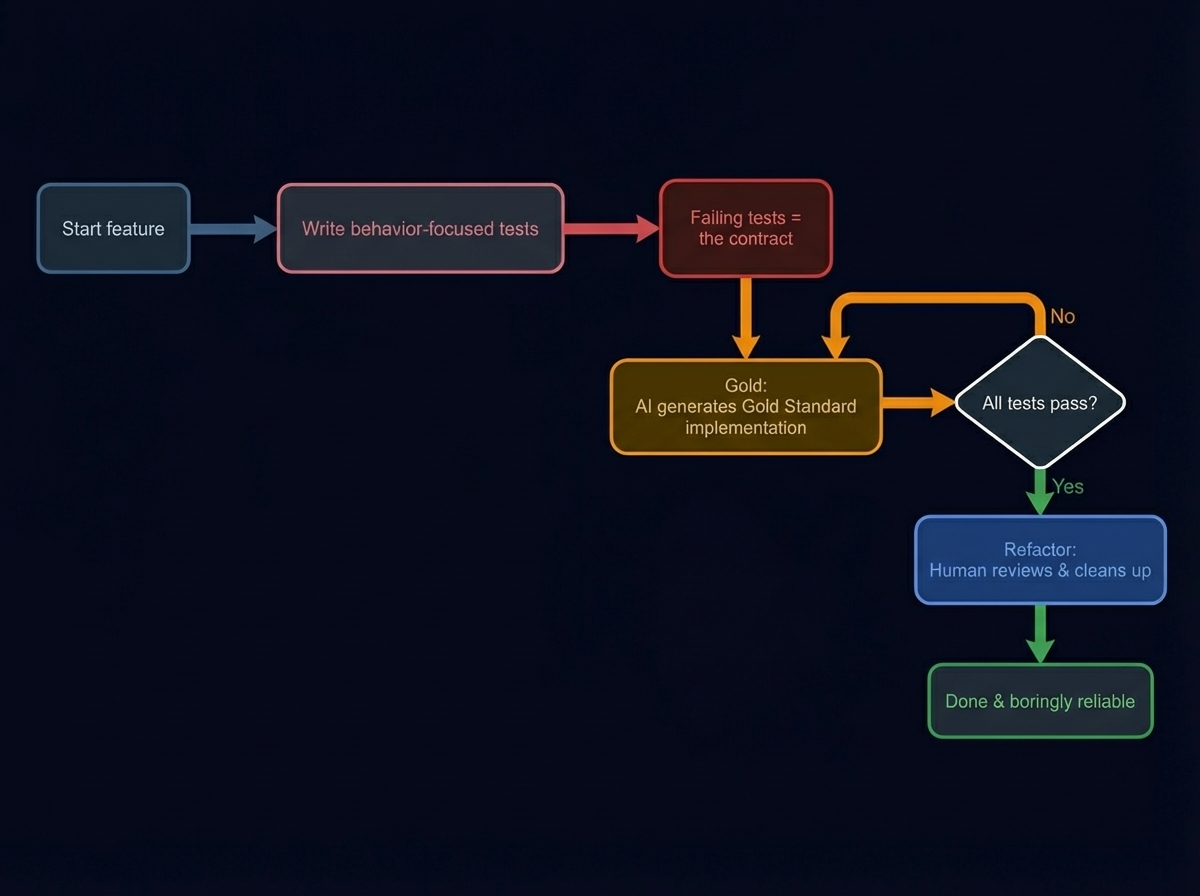

The Loop Visualized

Yes, it’s another diagram. No, it doesn’t require a SAFe certification to understand.

Instead of the classic Red-Green loop, Gold Standard TDD (GS-TDD) introduces a Gold step that uses the AI's ability to generate complete, robust solutions upfront.

Why classic Red-Green-Refactor breaks down with AI

Classic TDD works beautifully when a human is typing every line, aiming for small, safe steps to avoid over-engineering.

- Red – Write a failing test

- Green – Write the smallest change to make it pass

- Refactor – Clean up

Now add an AI coding assistant. It doesn't get tired, it has huge working memory, and it wants to impress you with complete solutions.

What do most people do? They keep the old ritual:

- Write a small test (maybe)

- Ask the AI: “Make the test pass”

- Accept whatever “green” happens to look like

The result is code that technically passes tests but ignores performance, security, edge cases, or maintainability. "Make it green" is not enough when the author is a stochastic parrot with infinite energy and zero long-term responsibility.

Enter Gold: From smallest-possible to production-minded

In Gold Standard TDD (GS-TDD), the G stands for Gold:

Don’t ask the AI for the minimum implementation.

Ask for a Gold Standard implementation from the start.

A Gold Standard implementation is not perfect, but it is:

- Intentionally holistic for this context

- A serious first attempt at production-quality code

- Guided by clear tests and constraints, not vibes

Instead of "Just make this test pass," you say:

"Given these tests and constraints, generate a Gold Standard implementation suitable for production in this codebase."

Red: Tests as both spec and spell

With AI in the loop, your tests are no longer just regression safety nets. They are Specification, Prompt, and Contract.

Bad tests = bad contract = bad prompts. In other words: if your tests are vibes, your AI will be too.

Minimal, implementation-shaped test (Bad)

    it("returns true for valid credentials", () => {
      const result = authenticateUser("john.doe@example.com", "Secret123");
      expect(result).toBe(true);
    });

This tells the AI almost nothing. What is "valid"? Side effects? Security? This is technically a test. It is not a conversation.

BDD-style, AI-friendly test (Gold Standard)

    it("authenticates a known user with correct credentials", async () => {
      /**
       * Given a registered user "john.doe@example.com" with a secure password
       * When they attempt to log in with the correct email and password
       * Then authentication should succeed
       * And their lastLoginAt timestamp should be updated
       * And a short-lived session token should be created and returned
       * And no sensitive data (like password hashes) should be exposed in the response
       */
      const user = await createTestUser({
        email: "john.doe@example.com",
        password: "Secret123",
      });

      const result = await authenticateUser("john.doe@example.com", "Secret123");

      expect(result.ok).toBe(true);
      expect(result.user.id).toBe(user.id);
      // toBeCloseToNow is a custom matcher (registered via expect.extend), not a built-in
      expect(result.user.lastLoginAt).toBeCloseToNow();
      expect(result.sessionToken).toMatch(/^[A-Za-z0-9\-_]+$/);
      // Assumes a leaked hash would show up under the name passwordHash
      expect(result.user).not.toHaveProperty("passwordHash");
    });

Now the AI has semantic anchors: "short-lived session token", "no sensitive data exposed", "timestamp updated". When you later feed this file to the AI, this test is pure signal.

This is basically BDD with an ulterior motive: you’re not just helping humans read the test, you’re feeding the AI a structured story it can’t pretend to misunderstand.
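The Gold Standard test calls toBeCloseToNow(), which is not a built-in matcher, so it has to come from somewhere. Here is a minimal sketch of how it might look. The 5-second default tolerance and the injectable clock are assumptions made for illustration, not something the original test specifies.

```typescript
// Hypothetical matcher: shape follows the { pass, message } contract that
// Jest-style expect.extend() matchers return. Tolerance is an assumed default.
type MatcherResult = { pass: boolean; message: () => string };

function toBeCloseToNow(
  received: unknown,
  toleranceMs: number = 5000,
  now: number = Date.now(), // injectable so the matcher itself can be tested
): MatcherResult {
  // Anything that is not a Date becomes NaN and therefore fails below.
  const time = received instanceof Date ? received.getTime() : Number.NaN;
  const diff = Math.abs(now - time);
  // Comparisons with NaN are always false, so invalid dates fail too.
  const pass = diff <= toleranceMs;
  return {
    pass,
    message: () =>
      pass
        ? `expected ${String(received)} not to be within ${toleranceMs} ms of now`
        : `expected ${String(received)} to be within ${toleranceMs} ms of now`,
  };
}
```

In a Jest setup file this would typically be registered with `expect.extend({ toBeCloseToNow })`. TypeScript projects also need a small type declaration for the new matcher, which is left out here.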

Gold: Asking the AI for more than green

Once you’ve got solid tests, the Gold step looks like this:

“Here are behavior-focused tests for authenticateUser.

Please generate a Gold Standard implementation that:

- passes all tests

- is safe to use in production

- does not log or expose sensitive data

- separates transport, domain and persistence concerns

- is easy to extend later with MFA”

You’re not approving code because it “looks smart”. You’re approving code because it passes a good test suite, respects your constraints, and feels maintainable.
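To make that prompt less abstract, here is a rough sketch of the kind of answer you might hope to get back. Everything in it is an assumption for illustration: the repository interface, the in-memory store, the 15-minute session lifetime, and the choice of scrypt. It is synchronous only so it stays short and runnable; a real persistence layer would be async.

```typescript
import { randomBytes, scryptSync, timingSafeEqual } from "node:crypto";

// All names below are illustrative assumptions, not the article's real code.
interface StoredUser {
  id: string;
  email: string;
  passwordHash: Buffer;
  salt: Buffer;
  lastLoginAt: Date | null;
}

// Persistence boundary: the domain logic only ever sees this interface.
interface UserRepository {
  findByEmail(email: string): StoredUser | undefined;
  save(user: StoredUser): void;
}

type AuthResult =
  | {
      ok: true;
      user: { id: string; email: string; lastLoginAt: Date };
      sessionToken: string;
      sessionExpiresAt: Date;
    }
  | { ok: false; error: "invalid_credentials" };

const SESSION_TTL_MS = 15 * 60 * 1000; // assumed meaning of "short-lived"

const hashPassword = (password: string, salt: Buffer): Buffer =>
  scryptSync(password, salt, 32);

function makeAuthenticator(repo: UserRepository) {
  return function authenticateUser(email: string, password: string): AuthResult {
    const user = repo.findByEmail(email);
    // Hash even when the user is unknown so both paths do similar work.
    const candidate = hashPassword(password, user?.salt ?? Buffer.alloc(16));
    const expected = user?.passwordHash ?? Buffer.alloc(32);
    const matches = timingSafeEqual(candidate, expected);
    if (!user || !matches) {
      // One generic error: "no such user" and "wrong password" look the same.
      return { ok: false, error: "invalid_credentials" };
    }
    const now = new Date();
    user.lastLoginAt = now;
    repo.save(user);
    return {
      ok: true,
      // Copy only safe fields; the hash and salt never leave this function.
      user: { id: user.id, email: user.email, lastLoginAt: now },
      sessionToken: randomBytes(32).toString("base64url"),
      sessionExpiresAt: new Date(now.getTime() + SESSION_TTL_MS),
    };
  };
}

// In-memory stand-in for a database, used only to exercise the sketch.
function createInMemoryUserRepository(): UserRepository {
  const users = new Map<string, StoredUser>();
  return {
    findByEmail: (email) => users.get(email.toLowerCase()),
    save: (user) => {
      users.set(user.email.toLowerCase(), user);
    },
  };
}

// Hypothetical test helper, loosely mirroring createTestUser from the test.
function createTestUser(repo: UserRepository, email: string, password: string): StoredUser {
  const salt = randomBytes(16);
  const user: StoredUser = {
    id: randomBytes(8).toString("hex"),
    email,
    salt,
    passwordHash: hashPassword(password, salt),
    lastLoginAt: null,
  };
  repo.save(user);
  return user;
}
```

The point is not this exact code. Each constraint in the prompt leaves a visible trace you can review: the repository interface marks the persistence boundary, the result type has no room for a password hash, and nothing is logged. Session storage, rate limiting, and MFA are deliberately left out.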

Refactor: Where humans earn their salary

Once Red and Gold are done, you have working code. Now comes the Refactor step.

Here’s the trick: the AI can help here too – but you stay in charge.

- Say “no” when the AI starts to over-generalize.

- Push it back towards boringly reliable solutions.

- Keep an eye on readability, domain language, and team conventions.

If future-you opens this file in six months and quietly swears at past-you, the Refactor step was not finished.

The Accountability Line

AI: Responsible for drafts, proposals, and grunt work.

Human: Accountable for behavior, quality, and risk.

A small end-to-end example

Imagine a simple feature: “Users can reset their password via email.”

1. Red

You write BDD-style tests describing the given/when/then, security expectations, and what must not be observable to attackers.

2. Gold

You ask the AI: "Using only the tests above as the behavioral contract, implement a Gold Standard password reset flow. Requirements: Tokens must be single-use, never log tokens, API response must not reveal user existence..."

3. Refactor

You review the code like a senior dev reviewing a junior’s PR. You enforce your team’s style and boundaries.
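As a sketch of what might land on your desk for review in step 3, here is one possible shape for the core of that reset flow. The names, the 30-minute token lifetime, the injected dependencies, and the in-memory token store are all assumptions for illustration. It covers the three requirements named in the prompt and nothing else.

```typescript
import { createHash, randomBytes } from "node:crypto";

// Illustrative assumptions throughout: names, TTL, and the in-memory store.
const RESET_TTL_MS = 30 * 60 * 1000;

interface ResetRecord {
  email: string;
  expiresAt: number;
}

interface ResetDeps {
  isRegistered(email: string): boolean;
  sendEmail(to: string, token: string): void;
  setPassword(email: string, newPassword: string): void;
  now?: () => number; // injectable clock for tests
}

// Only a hash of the token is stored, so a leaked store cannot be replayed.
const sha256 = (value: string): string =>
  createHash("sha256").update(value).digest("hex");

function createPasswordResetService(deps: ResetDeps) {
  const pending = new Map<string, ResetRecord>(); // key: sha256(token)
  const now = deps.now ?? Date.now;

  return {
    // Same response whether or not the email belongs to a registered user.
    requestReset(email: string): { status: "accepted" } {
      if (deps.isRegistered(email)) {
        const token = randomBytes(32).toString("base64url");
        pending.set(sha256(token), { email, expiresAt: now() + RESET_TTL_MS });
        // The raw token goes to the mailer only; it is never logged or stored.
        deps.sendEmail(email, token);
      }
      return { status: "accepted" };
    },

    confirmReset(token: string, newPassword: string): { ok: boolean } {
      const key = sha256(token);
      const record = pending.get(key);
      // Delete before any other check: a token is consumed on first use.
      pending.delete(key);
      if (!record || record.expiresAt < now()) {
        return { ok: false };
      }
      deps.setPassword(record.email, newPassword);
      return { ok: true };
    },
  };
}
```

A reviewer in the Refactor step would still have questions this sketch does not answer. Does sending the email inline leak user existence through response timing? Where do password strength rules live? These are the judgment calls that stay with the human.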

How to start using Red → Gold → Refactor tomorrow

You don’t have to overhaul your entire process at once. Start tiny:

- Pick one small feature or bugfix.

- Write 2–4 BDD-ish tests that read like behavior descriptions.

- Explicitly tell the AI you want a Gold Standard implementation.

- Keep yourself accountable in Refactor: Would you sign your name under this?
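The third step is easier to repeat if the Gold prompt is assembled the same way every time. Here is a minimal sketch of such a helper. The function name, the input shape, and the exact wording are assumptions. It builds the prompt text and does not call any AI API.

```typescript
// Hypothetical helper: every name here is an assumption made for illustration.
interface GoldPromptInput {
  target: string; // function or module under test
  testSource: string; // the test file, pasted verbatim
  constraints: string[]; // requirements the tests cannot express on their own
}

function buildGoldPrompt({ target, testSource, constraints }: GoldPromptInput): string {
  if (testSource.trim() === "") {
    // No Red step, no Gold step: refuse to prompt without a behavioral contract.
    throw new Error("Write the failing tests first");
  }
  const bullets = ["passes all tests", ...constraints].map((c) => `- ${c}`);
  return [
    `Here are behavior-focused tests for ${target}:`,
    "",
    testSource.trim(),
    "",
    "Given these tests and constraints, generate a Gold Standard implementation",
    "suitable for production in this codebase. It must satisfy all of the following:",
    ...bullets,
  ].join("\n");
}
```

Calling it with target "authenticateUser", the test file contents, and constraints such as "does not log or expose sensitive data" yields a prompt close to the one in the Gold section above. Because it refuses to run on an empty test file, the Red step cannot be skipped by accident.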

GS-TDD is not about making AI “smarter”. It’s about putting enough structure around it that your AI assistant stops guessing, your team stops vibe-coding, and “boringly reliable” becomes the default outcome.

Next Ward, we’ll get concrete with prompt templates for Gold, patterns for AI-friendly tests, and applying this loop to frontends.

Don’t ask your AI to “just make it green”. Ask it to help you make it Gold.